I think everyone who programs has heard the story of the failure of the waterfall process.

Back in the olden days, to build software, someone would first write up a document with all of the requirements. Then the software architects would design a system and produce a document describing it. Then, I guess, there would be some kind of implementation plan, and that would be completed. Then the programmers would code it up. Finally, the testers would test it. Then you ship it.

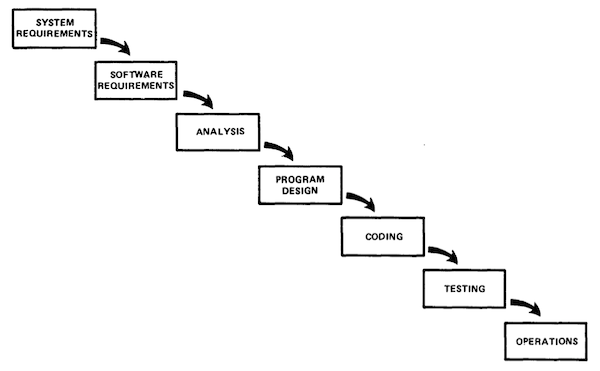

It looked like this:

I’m going to say that I think this never happened in real life. I doubt anyone seriously tried it for anything big. Or, if they did, I hope they failed super-fast and started over.

This diagram comes from a 1970 paper called *Managing the Development of Large Software Systems* by Dr. Winston Royce. Right after this figure, he says: “I believe in this concept, but the implementation described above is risky and invites failure.”

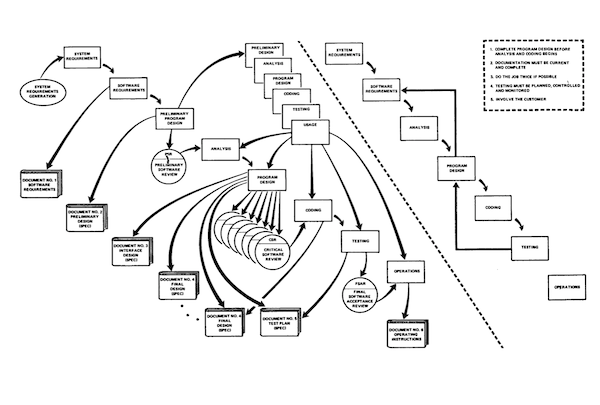

Then he goes through a bunch of fixes to the process and ends up with:

The process as described seems completely reasonable to me (see the original paper). It features:

- Code review

- Automated tests

- DevOps manuals

- The idea of a spike implementation to learn and de-risk the real one

- Including Customers in the process (every circle icon in the figure)

So, even in 1970, it was known that waterfall could never work, and the term was only coined later to describe Royce’s first diagram, which he had immediately rejected.

I think waterfall only really existed as a concept to immediately reject and fix, not a serious suggestion for what to do for a large software project.